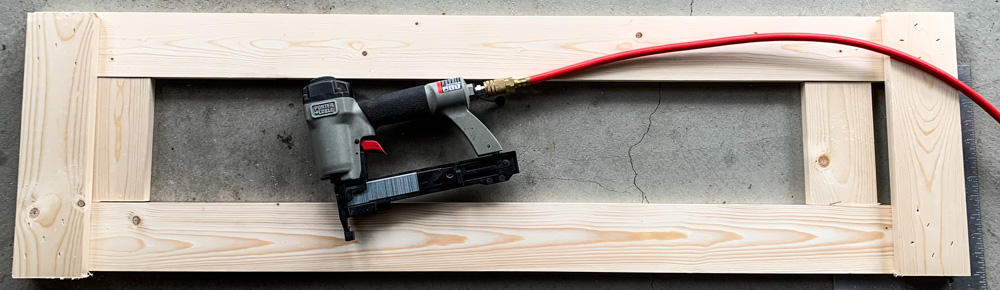

Constructing crates for the existing Marineland 60g and JBJ Nano Cube 28g; although I don't have any plans to reuse these tanks, they will have to get moved to AZ since the fish and corals are coming out just before we're ready to hit the road.


neoGeorge's Build: One Reef & One Planted Freshwater

- Build Thread

- Thread starter neoGeorge


Accidents Don’t Just Happen—They Have to Happen

Our very attempts to stave off disaster make unpredictable outcomes more likely.

Read in The Atlantic: https://apple.news/AxDIeJCtxTKKBNhPSWC_K6w

Something to keep in mind as we work to prevent disasters in our reef tanks. The following is quoted from The Atlantic article:

"Accidents are part of life. So are catastrophes. Two of Boeing’s new 737 Max 8 jetliners, arguably the most modern of modern aircraft, crashed in the space of less than five months. A cathedral whose construction started in the 12th century burned before our eyes, despite explicit fire safety procedures and the presence of an on-site firefighter and a security agent. If Notre Dame stood for so many centuries, why did safeguards unavailable to prior generations fail? How did modernizing the venerable Boeing 737 result in two horrific crashes, even as, on average, air travel is safer than ever before?

These are questions for investigators and committees. They are also fodder for accident theorists. Take Charles Perrow, a sociologist who published an account of accidents occurring in human-machine systems in 1984. Now something of a cult classic, Normal Accidents made a case for the obvious: Accidents happen. What he meant is that they must happen. Worse, according to Perrow, there’s a humbling cautionary tale lurking in complicated systems: Our very attempts to stave off disaster by introducing safety systems ultimately increases the overall complexity of the systems, ensuring that some unpredictable outcome will rear its ugly head no matter what. Complicated human-machine systems might surprise us with outcomes more favorable than we had any reason to expect. They also might shock us with catastrophe.

When disaster strikes, past experience has conditioned the public to assume that hardware upgrades or software patches will solve the underlying problem. This indomitable faith in technology is hard to challenge—what else solves complicated problems? But sometimes our attempts to banish accidents make things worse.

...

What makes the Boeing disaster so frustrating is the relative obviousness of the problem in retrospect. Psychologists and economists have a term for this; it’s called the “hindsight bias,” the tendency to see causes of prior events as obvious and predictable, even when the world has no clue leading up to them. Without the benefit of hindsight, the complex causal sequences leading to catastrophe are sometimes impossible to foresee. But in light of recent tragedy, theorists like Perrow would have us try harder anyway. Trade-offs in engineering decisions necessitate an eternal vigilance against the unforeseen. If some accidents are a tangle of unpredictability, we’d better spend more time thinking through our designs and decisions—and factoring in the risks that arise from complexity itself.

...

The increasing complexity of modern human-machine systems means that, depressingly, unforeseen failures are typically large-scale and catastrophic. The collapse of the real estate market in 2008 could not have happened without derivatives designed not to amplify financial risk, but to help traders control it. Boeing would never have put the 737 Max’s engines where it did, but for the possibility of anti-stall software making the design “safe.”

In response to these risks, we play the averages. Overall, air travel is safer today than in, say, the 1980s. Centuries old cathedrals don’t burn, on average, and planes don't crash. Stock markets don’t, either. On average, things usually work. But our recent sadness forces a reminder that future catastrophes require more attention to the bizarre and (paradoxically) to the unforeseen. Our thinking about accidents and tragedies has to evolve, like the systems we design. Perhaps we are capable of outsmarting complexity more often. Sometimes, though, our recognition of what we’ve done will still come too late."


crusso1993



Kind of a foreboding message but one that tells truth. I like it. I'm not a big believer in sugar-coating or hiding the truth because it may be considered dark or scare someone or hurt their feelings. As a matter of fact, I believe it is our duty to share such messages and help people to determine what they will do with them, where possible. Thanks for sharing!


You're welcome, @crusso1993! Isn't there a hashtag #truthinreefing? (I may have this wrong; I remember seeing a tag like this from @NY_Caveman.)

I recently read a book by Julian Spilsbury, Great Military Disasters.

If you are into history, I recommend reading it. You can see the thinking that led to many of these disasters. It also gives insight into generals and commanding an army.

It shows that playing the averages and trusting what is not there is not just modern thinking.

Rather, it shows that it is human nature to do these things.

The book starts with Mount Tabor 1125 BC and ends with Dien Bien Phu 1954.


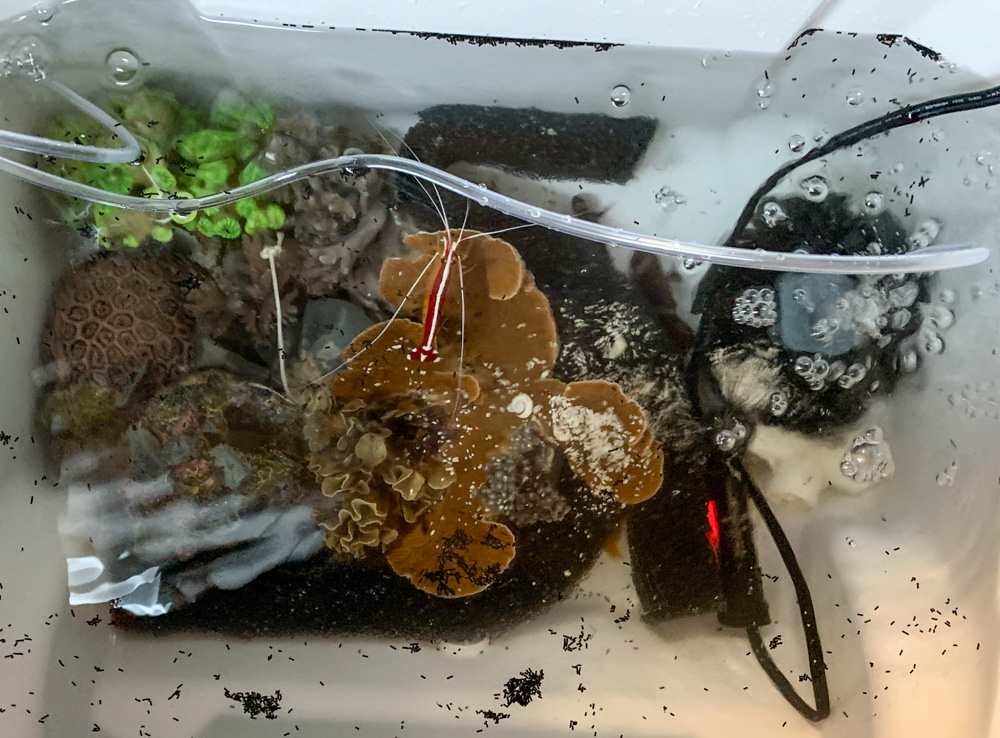

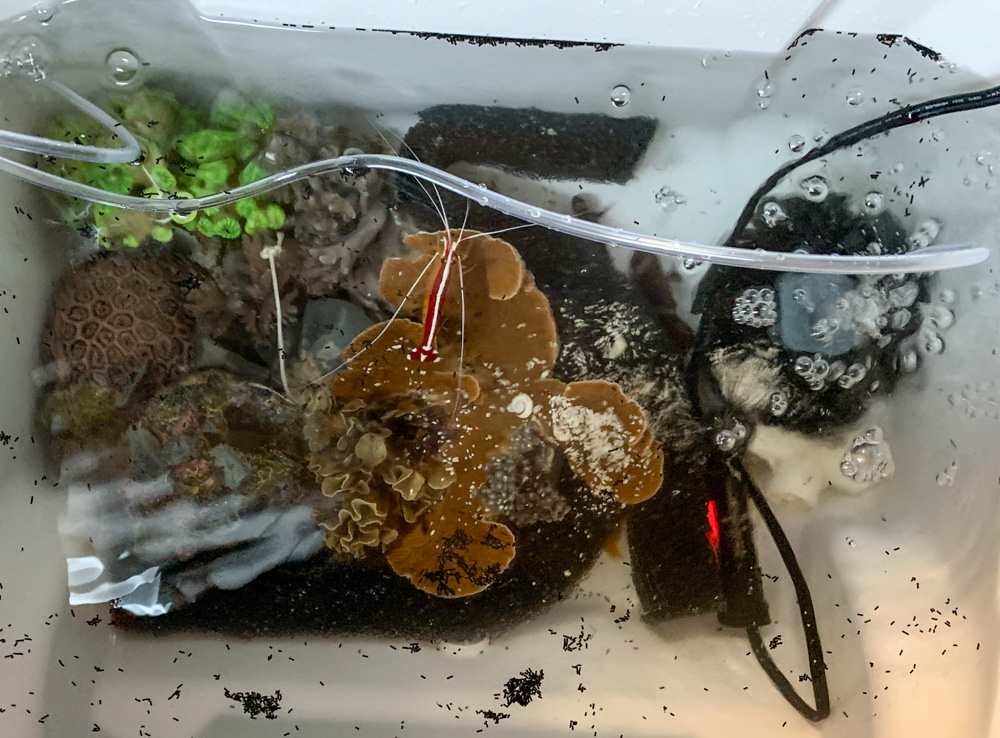

Critters are in the coolers. How they will weather the next 3 days and 2000 miles is an unknown; moving the aquariums on top of moving a whole household pegs the exhaustion meter for sure.

crusso1993


Good luck!

Hopefully everything turns out well. Safe travels to you as well.

crusso1993


Happy you made it in this fashion...

Glad you made it safely, and the freshwater is looking great.
